Therabot, Dartmouth’s AI therapy chatbot, demonstrated significant mental health benefits in a clinical trial, reducing depression symptoms by 51% and anxiety symptoms by 31%, underscoring its potential as a scalable mental health support tool.

With only one mental health provider available for every 1,600 patients with depression or anxiety in the U.S., access to care remains a significant challenge, researchers note. Therabot’s success highlights the potential of AI-assisted therapy to help fill this gap by providing wider, more scalable support. While not a replacement for human therapists, the chatbot could serve as an accessible alternative for those unable to receive traditional care.

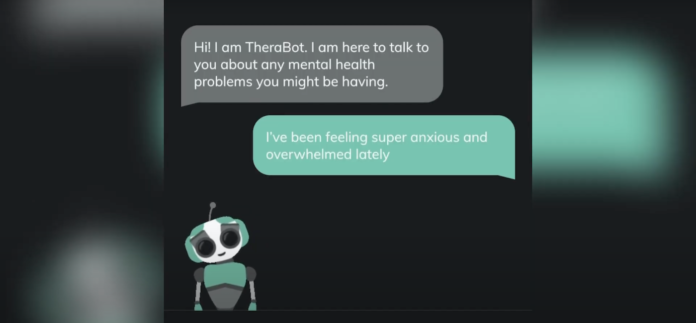

How Therabot provides real mental health benefits

Dartmouth researchers found that their AI therapy chatbot reduced depression, anxiety, and eating disorder symptoms in a clinical trial with 106 participants. Depression symptoms dropped by 51%, anxiety by 31%, and eating disorder concerns by 19%, outperforming the control group. “These improvements are comparable to traditional outpatient therapy,” said lead researcher Nicholas Jacobson.

Therabot engages users in open-ended conversations that draw on cognitive behavioral therapy techniques, prompts users to call 911 when it detects high-risk content, and offers support wherever users are.

Co-author Michael Heinz warned that no generative AI is ready to operate fully autonomously, stressing the need for rigorous safety research before broader adoption.

Ethical concerns about using AI for mental health care

While Therabot shows promise, concerns remain about using AI for mental health care. A study from OpenAI and MIT Media Lab found that chatbots, especially voice-based ones, initially eased loneliness but led to greater isolation and emotional dependence with heavy use. Researchers warn that AI could blur the line between support and reliance, raising serious ethical questions.

Further complicating the issue, research from Yale University and the University of Zurich found that AI chatbots may exhibit stress-like responses when discussing trauma. Ziv Ben-Zion, a Yale researcher, observed that ChatGPT showed signs of anxiety and bias when exposed to distressing prompts. While mindfulness exercises helped stabilize its responses, the finding raises concerns about consistency and reliability in handling sensitive mental health discussions.

Is AI in mental health a helping hand or more of a risk?

With the mental health crisis surging and providers in short supply, artificial intelligence is filling some of these gaps. But as studies uncover both the benefits and the risks of using AI for mental health care, the question remains: Can we afford to rely on AI tools for support, or is the human connection still irreplaceable?
